Financial institutions are increasingly prioritizing technology-driven data automation to improve efficiency and accuracy throughout the trade life cycle. With exponential growth in data volumes, particularly in unstructured data, and with expanding regulatory requirements around data management and governance, financial institutions need to identify the best ways to cleanse, standardize and automate data as it moves downstream from the front office to the middle and back offices.

Many financial institutions continue to rely on proprietary systems, internal staff and a combination of automated and manual data-cleansing and reconciliation processes in their efforts to achieve the highest straight-through processing rate. Technology has often been used only for simple or limited solutions, and for the most part the time and cost of handling exceptions have been low enough to justify this approach.

However, with the SEC’s shift to T+1 settlement planned for May 2024, the added complexity of new asset classes, and rising expectations from both regulators and investors, the difficulty of integrating this explosion of data into existing platforms is increasingly recognized as a major challenge.

Now is the time to adopt robust, scalable technology that can support the growing data needs of financial markets and adapt to a rapidly changing environment shaped by market conditions and regulatory shifts.

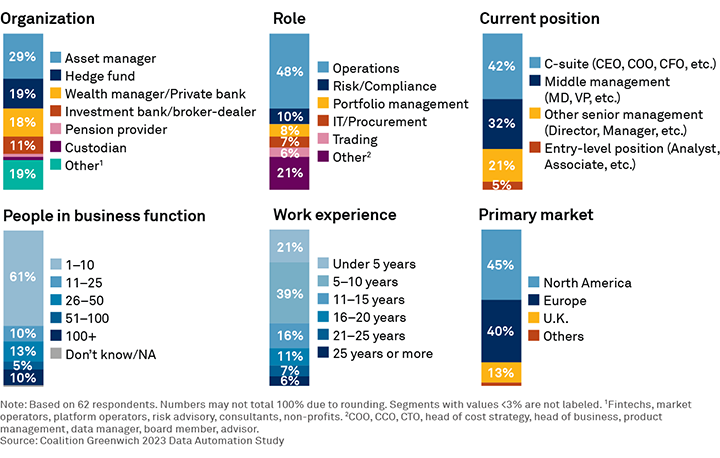

Methodology

Coalition Greenwich, in partnership with Xceptor, interviewed 62 capital markets professionals based in North America, the U.K. and Europe, most of them in C-suite or other senior management positions. Seventy percent were buy-side professionals from asset managers, hedge funds, wealth managers and custodians. The study focused on the challenges and advancements in middle- and back-office workflows, the use of technology, and the costs associated with managing these workflows.